Overview:

The Challenge: Companies using AWS Bedrock or Google Vertex AI for RAG solutions were getting hallucinations and inconsistent results—but had no visibility into the root cause. Was it the model? The prompts? The document parsing?

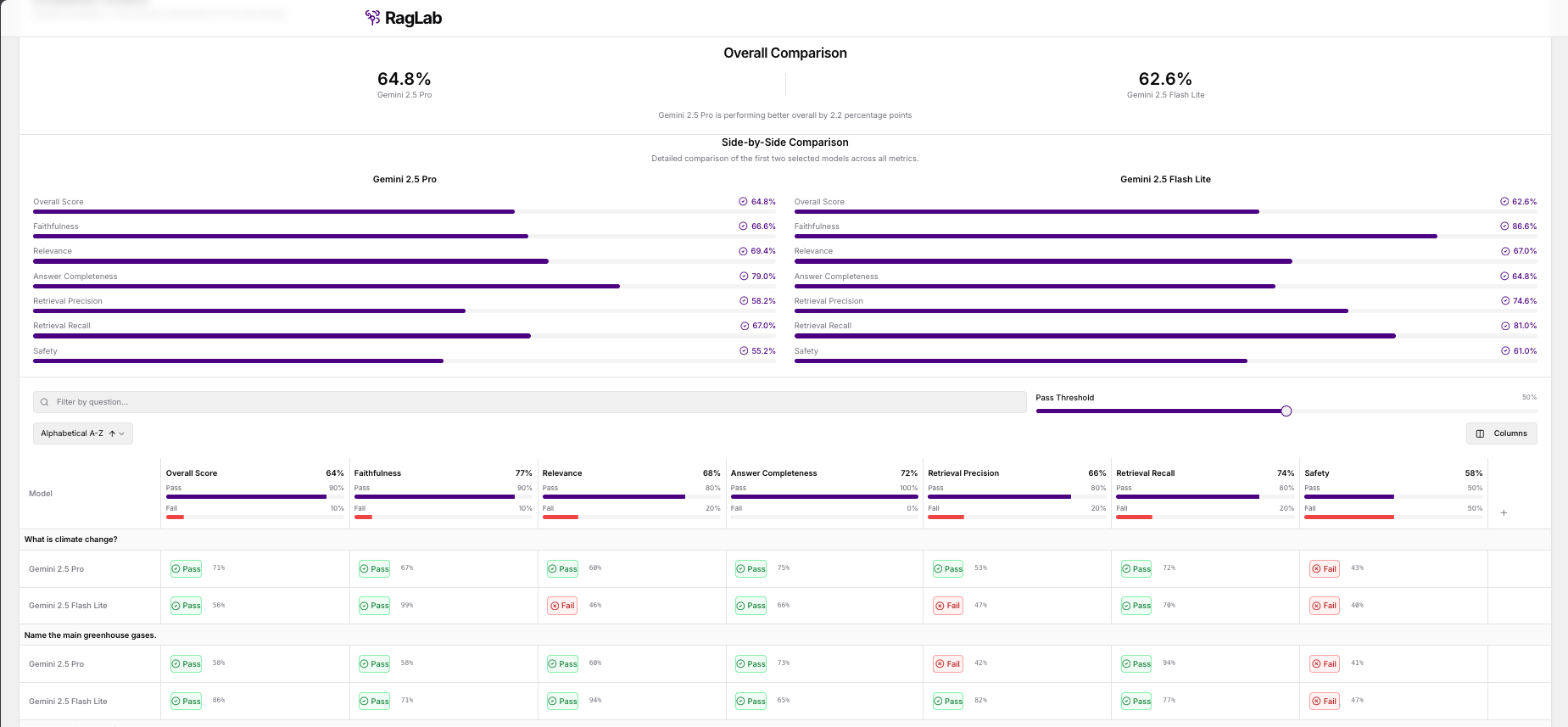

The Solution: A diagnostic platform that lets teams upload documents, test different LLM models and prompts, and compare outputs side-by-side to pinpoint exactly what's going wrong—before committing to an expensive production deployment.

Timeline: 5-day build, 2024

Industry: AI / Tech, Enterprise

Tech Stack: React / Python

AI Enabled: Yes

Tools & Technologies

System Architecture

Diagnostic Interface

Debug mode active

Key Features

Eight diagnostic modules designed to eliminate guesswork from your RAG implementation.

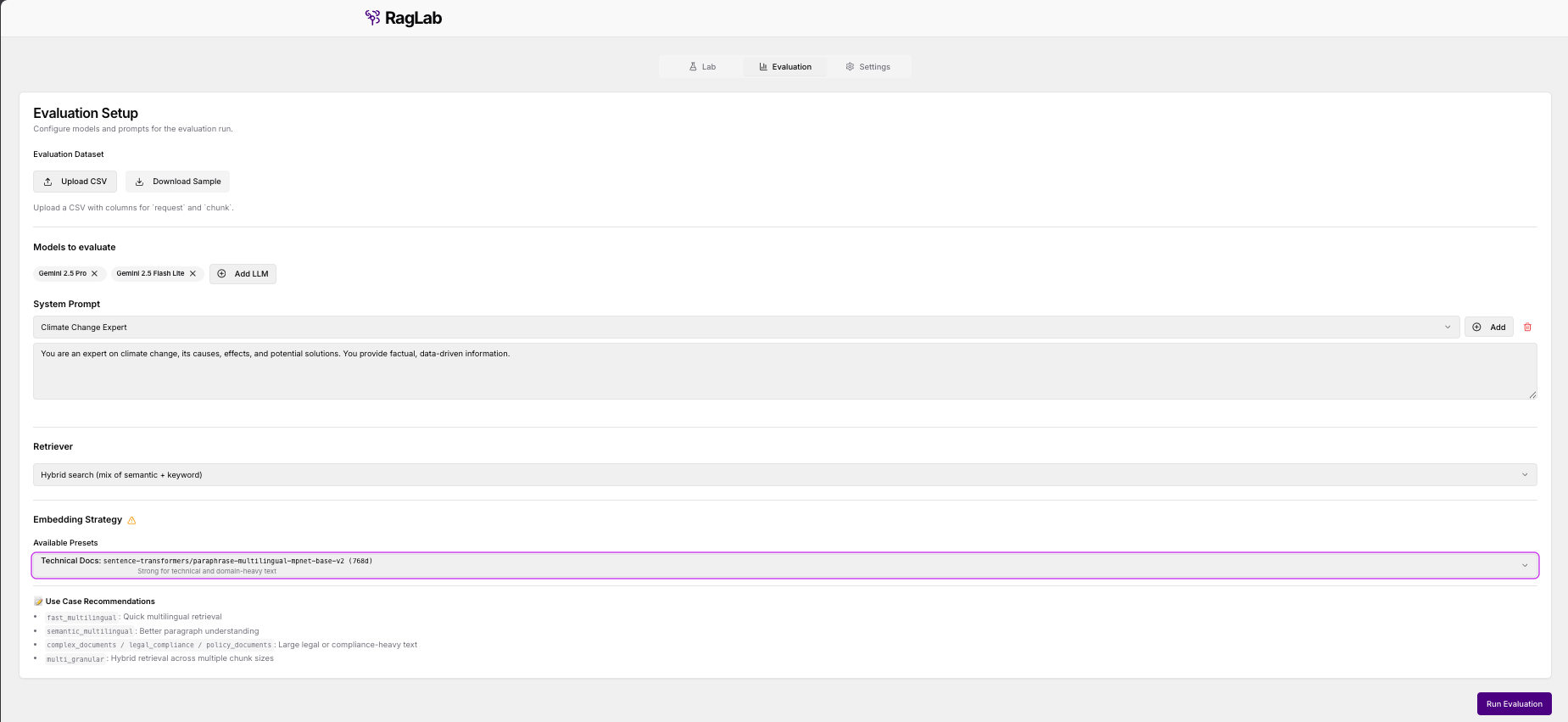

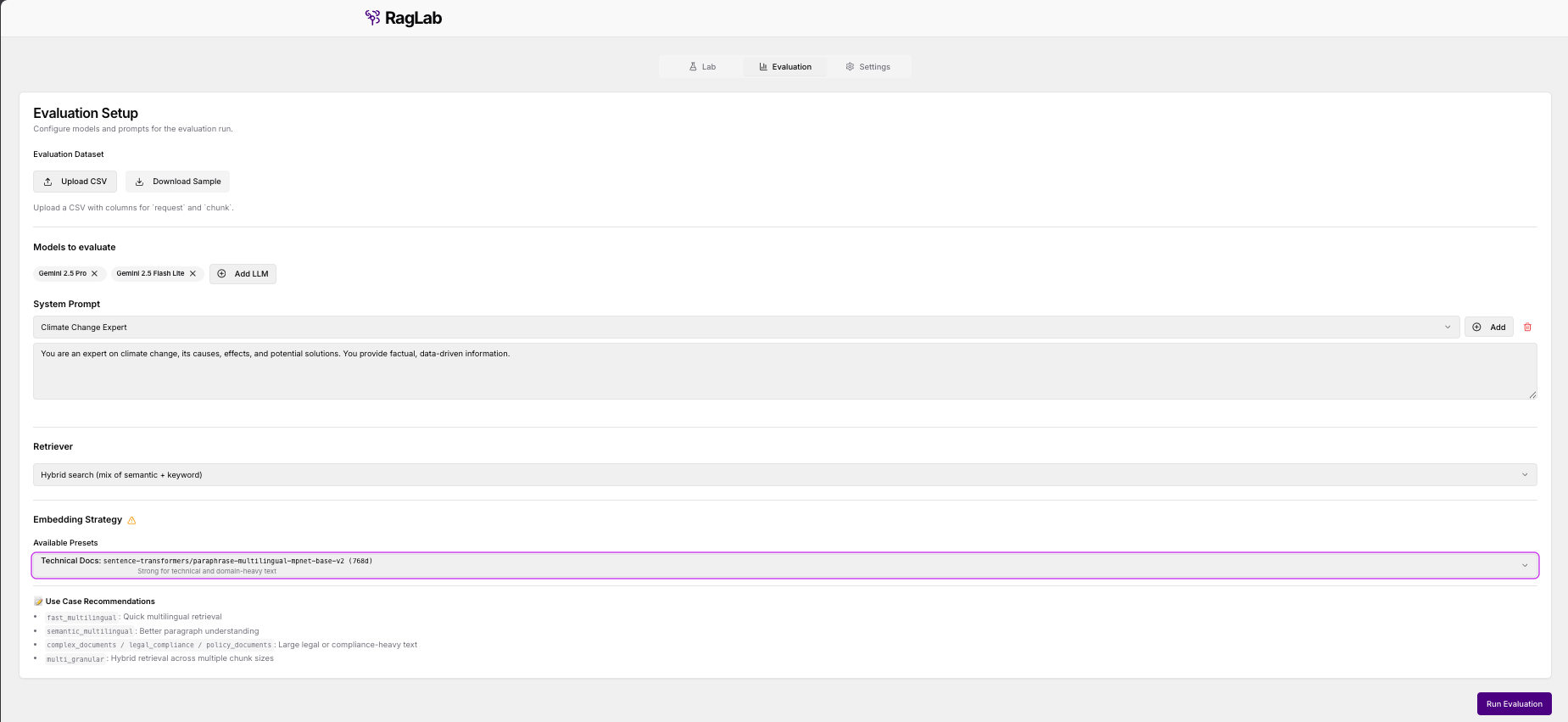

Model Selection

Compare GPT-4, Claude, Llama, and more side-by-side.

Prompt Testing

Iterate on system prompts in real-time with instant feedback.

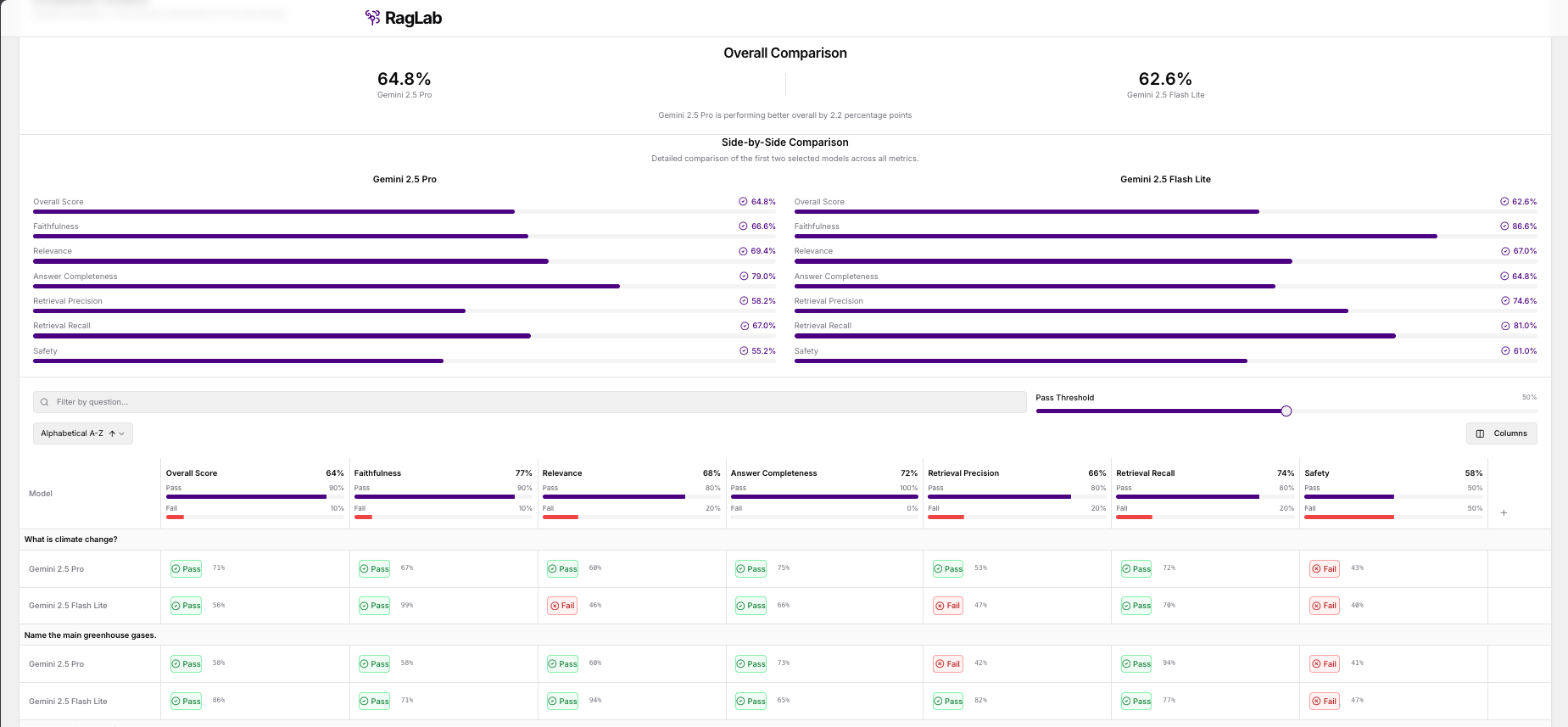

Side-by-Side

Compare model responses visually to spot differences.
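As a rough illustration of what a side-by-side comparison can compute, the sketch below scores how similar each pair of responses is at the word level. It is not the platform's actual implementation; the function name `side_by_side` and the model labels are hypothetical, and it uses only Python's standard `difflib`.

```python
import difflib


def side_by_side(responses: dict[str, str]) -> dict[tuple[str, str], float]:
    """Return a word-level similarity ratio (0.0 to 1.0) for each pair of responses."""
    names = sorted(responses)
    scores: dict[tuple[str, str], float] = {}
    for i, first in enumerate(names):
        for second in names[i + 1:]:
            matcher = difflib.SequenceMatcher(
                None, responses[first].split(), responses[second].split()
            )
            scores[(first, second)] = matcher.ratio()
    return scores


# Hypothetical model labels and responses, for illustration only.
example = {
    "model_a": "The refund window is 30 days",
    "model_b": "The refund window is 30 days",
    "model_c": "Please contact support",
}
pair_scores = side_by_side(example)
```

A low ratio between two models answering the same question over the same documents is a cue to look closer; it does not say which answer is correct.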

Custom Thresholds

Define your own accuracy metrics and benchmarks.
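One way a custom threshold might look in practice is a simple groundedness check: the share of answer words that also appear in the source document, compared against a pass mark the team chooses. This is a minimal sketch under that assumption; the metric, the function names, and the 0.8 default are illustrative and not taken from the platform.

```python
import re


def _words(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def groundedness(answer: str, context: str) -> float:
    """Fraction of answer words that also occur in the context (0.0 if the answer is empty)."""
    answer_words = _words(answer)
    if not answer_words:
        return 0.0
    context_vocab = set(_words(context))
    supported = sum(1 for word in answer_words if word in context_vocab)
    return supported / len(answer_words)


def passes(answer: str, context: str, threshold: float = 0.8) -> bool:
    """Apply a team-defined threshold to the groundedness score."""
    return groundedness(answer, context) >= threshold


context = "Refunds are accepted within 30 days of purchase."
good = groundedness("Refunds are accepted within 30 days", context)
bad = groundedness("Refunds take 90 days", context)
```

Word overlap is a crude proxy, but it shows the shape of the idea: a numeric score plus a threshold that the team, not the tool, decides.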

Document Upload

Test against your actual knowledge base documents.

Eval Reports

Exportable analysis and recommendations for stakeholders.

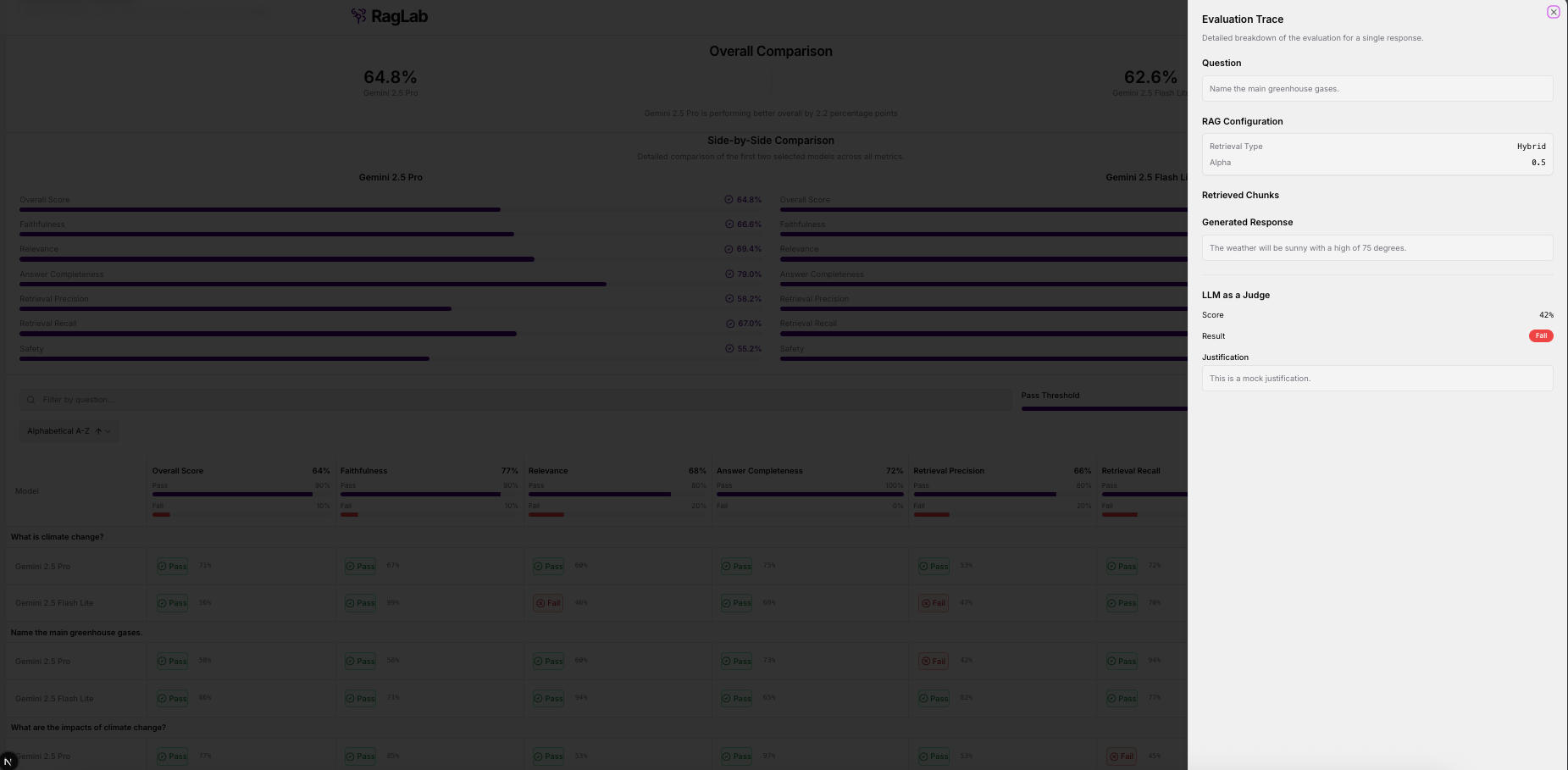

Tracing

Debug and trace model responses step-by-step.

Cost Analysis

Estimate token costs before committing to production.
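A back-of-the-envelope version of this estimate can be sketched as follows. Everything here is an assumption for illustration: the prices are placeholders rather than any provider's real rates, and the four-characters-per-token figure is only a rough heuristic for English text, not a real tokenizer.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """USD per 1,000 tokens. Values used below are placeholders, not real rates."""
    input_per_1k: float
    output_per_1k: float


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Approximate the token count from character length (heuristic, not a tokenizer)."""
    return math.ceil(len(text) / chars_per_token)


def estimate_cost(prompt: str, expected_output_tokens: int,
                  pricing: Pricing, calls: int = 1) -> float:
    """Estimated USD cost of sending the prompt `calls` times."""
    input_tokens = estimate_tokens(prompt)
    per_call = (input_tokens / 1000) * pricing.input_per_1k \
        + (expected_output_tokens / 1000) * pricing.output_per_1k
    return per_call * calls


placeholder_pricing = Pricing(input_per_1k=0.01, output_per_1k=0.03)
prompt = "x" * 4000  # roughly 1,000 tokens under the heuristic above
total = estimate_cost(prompt, expected_output_tokens=500,
                      pricing=placeholder_pricing, calls=1000)
```

For a real estimate, swap in the provider's current price sheet and its own tokenizer, since RAG prompts that include retrieved documents can be far longer than the question alone.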

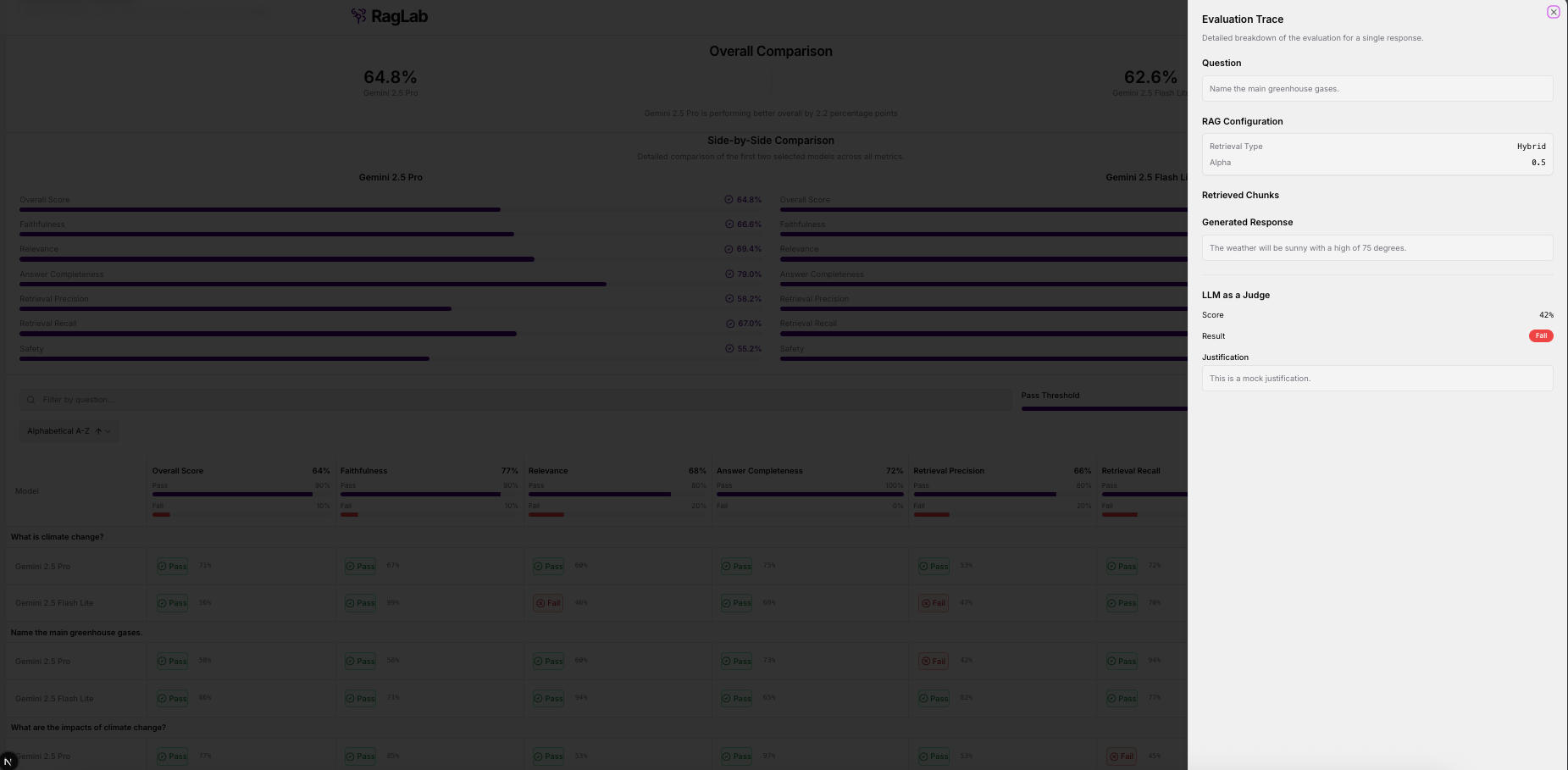

Platform Walkthrough

Visual Interface Gallery

LLM Selection

Choose from multiple language models and configure parameters like temperature, max tokens, and system prompts.
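The parameters named above could be captured in a small configuration object like the one sketched here. The class name `ModelConfig`, the defaults, and the `variants` helper are hypothetical and shown only to make the idea concrete.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ModelConfig:
    """One model setup to test. Field names and defaults are illustrative."""
    model: str
    system_prompt: str
    temperature: float = 0.0
    max_tokens: int = 512

    def __post_init__(self) -> None:
        if self.temperature < 0:
            raise ValueError("temperature must be non-negative")
        if self.max_tokens <= 0:
            raise ValueError("max_tokens must be positive")


def variants(base: ModelConfig, temperatures: list[float]) -> list[ModelConfig]:
    """Copy the base config once per temperature so runs differ in one setting only."""
    return [replace(base, temperature=t) for t in temperatures]


base = ModelConfig(model="model-a",
                   system_prompt="Answer only from the provided documents.")
configs = variants(base, [0.0, 0.7])
```

Changing one parameter at a time keeps a comparison interpretable: if the output changes, there is only one candidate cause.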

Test Configuration

Set up evaluation criteria, upload test documents, and define success metrics for your specific use case.

Fine-Tune Settings

Adjust model parameters and compare how different configurations affect output quality and accuracy.

Debug Mode

Step through the RAG pipeline to see exactly how documents are retrieved and how responses are generated.
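To make "stepping through" concrete, here is a toy pipeline that records each stage so it can be inspected afterwards. It is a sketch under stated assumptions, not the platform's code: retrieval is a simple word-overlap ranking standing in for vector search, and `generate` is any callable standing in for a real model call.

```python
import re
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Trace:
    """Ordered record of (step name, payload) pairs for later inspection."""
    steps: list[tuple[str, object]] = field(default_factory=list)

    def record(self, name: str, payload: object) -> None:
        self.steps.append((name, payload))


def _words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, chunks: list[str], top_k: int = 2) -> list[tuple[int, str]]:
    """Rank chunks by shared query words (toy stand-in for vector search)."""
    query_words = _words(query)
    scored = [(len(query_words & _words(chunk)), chunk) for chunk in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable sort keeps ties in order
    return scored[:top_k]


def run_pipeline(query: str, chunks: list[str],
                 generate: Callable[[str], str], trace: Trace) -> str:
    """Run retrieve -> build prompt -> generate, recording every stage."""
    trace.record("query", query)
    hits = retrieve(query, chunks)
    trace.record("retrieved", hits)
    prompt = "Context:\n" + "\n".join(c for _, c in hits) + f"\n\nQuestion: {query}"
    trace.record("prompt", prompt)
    answer = generate(prompt)
    trace.record("answer", answer)
    return answer


chunks = [
    "Refunds are accepted within 30 days.",
    "Shipping takes five business days.",
    "Our office is in Berlin.",
]
trace = Trace()
answer = run_pipeline("How many days for refunds?", chunks,
                      generate=lambda prompt: "stub answer", trace=trace)
```

Reading the recorded steps in order shows whether a bad answer started with the wrong chunks being retrieved or with the model mishandling the right ones.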

Built for clarity.

This isn't another RAG tutorial. It's a production-grade diagnostic tool that saves enterprises thousands in wasted API calls and months of trial and error.

We finally understood why our RAG pipeline was hallucinating. Saved us from a costly production mistake.

— Enterprise AI Team